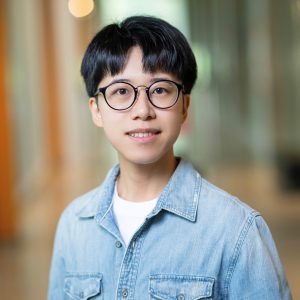

Congratulations to DMCBH member Dr. Xiaoxiao Li on her recent appointment as Canada Research Chair in Responsible Artificial Intelligence (AI). We spoke with Dr. Li, who is also an Assistant Professor in UBC’s Department of Electrical and Computer Engineering, about her research and what it means to build AI systems that are truly trustworthy.

Can AI in healthcare be trusted?

Artificial intelligence is rapidly transforming many aspects of our lives. From advanced language models like ChatGPT to self-driving vehicles and precision medicine, AI systems are increasingly making decisions with real consequences for people. However, many AI models function as “black boxes,” producing predictions without revealing how they were made. In clinical settings, this opacity can undermine trust and compromise patient safety.

AI models are often trained on huge datasets to predict outcomes like cancer grade or treatment response, but their complexity can make their reasoning hard to follow, even for experts. When an algorithm suggests a diagnosis or treatment plan but can’t explain its reasoning, doctors may hesitate or, worse, follow it without question. Either way, the lack of transparency puts patient safety and accountability at risk.

What is Responsible AI?

According to Dr. Li, responsible AI in medicine means earning the right to influence care by meeting four non-negotiable standards:

- Clinical utility: It demonstrably improves real-world decisions for patients, such as diagnosis or treatment.

- Equity and transparency: It performs consistently across demographic groups, exposes the factors behind each prediction and supports external audits.

- Privacy-centric security: It safeguards patient identities and resists attacks through techniques such as federated learning and differential privacy.

- Patient-oriented design: It is built with and for patients, providing explanations they can understand, honoring their values and consent, and embedding feedback loops that let clinicians and patients report issues and see them fixed.

“If any pillar is missing, patients—not just papers—bear the cost through delayed diagnoses, unequal care or lost trust,” Dr. Li warns.

The Importance of AI Explainability

Explainability means showing clearly how an AI reaches its decisions—so humans can understand, verify and trust the results.

Dr. Li’s lab incorporates explainability at three levels:

- Design-time: Models are trained to reflect clinical reasoning and avoid shortcuts.

- Deployment: Each prediction includes a score and interpretable “receipts” like heatmaps or text triggers to help clinicians verify and document decisions.

- Auditing: Explainable outputs drive bias and safety monitoring across demographics, support drift detection and enable regulatory approval without reusing patient data.

“Explainability isn’t an afterthought in our AI models,” says Dr. Li. “It’s the hinge that lets a technically strong model become a clinically usable one.”

Safeguarding AI in Healthcare

At UBC, Dr. Li leads the Trusted and Efficient AI (TEA) Lab, where she focuses on transforming how healthcare data is used to build reliable and fair AI systems.

“We ask how we can turn the enormous—and often siloed—streams of clinical text, imaging, and sensor data into AI decision-making tools that are trustworthy, verifiable and equitable,” she explains.

To achieve this, the TEA Lab is building a federated learning platform that enables institutions to train AI models collaboratively while keeping patient data secure. Beyond measuring accuracy, her team evaluates whether each model can meaningfully improve treatment decisions, maintain security across its lifecycle and operate within strict carbon and compute budgets.

This vision drives an integrated research program that spans everything from foundational model design to clinical testing. Key priorities include:

- Opening the black box: Tracing how models make decisions ensures they rely on valid medical features rather than artefacts like scanner metadata.

- Protocol alignment: Models are fine-tuned with clinician feedback so that every recommendation mirrors an accepted care pathway.

- Clinical reasoning: Enabling models to articulate step-by-step arguments, rather than simply output a single label, makes AI decisions more trustworthy.

- Rigorous evaluation: Each model undergoes a full audit loop, in which it is tested for fairness across demographics, resilience to distribution shifts, resistance to privacy attacks, adherence to sustainability goals and, above all, real-world clinical utility.

Through this meticulous process, Dr. Li and her team aim to deliver AI systems that clinicians trust, patients benefit from and regulators can confidently certify.

The Path to AI Research

“It began with a fascination for pattern-finding and automation,” Dr. Li says of her path into AI. “I turned toward medicine when I realized how many life-critical patterns hide beyond human perception.”

As an undergraduate research assistant at Harvard University, she worked on machine-learning models that flagged subtle abnormalities in brain MRI scans—early signs of Alzheimer’s disease that often go unnoticed until it’s too late.

“That project showed me two things,” Dr. Li recalls. “First, algorithms can surface clinically actionable signals long before symptoms become severe. Second, a single well-validated model can extend expert-level care to thousands of clinics that lack subspecialists, narrowing geographic and socioeconomic gaps.”

While completing her PhD at Yale University, she worked closely with radiologists and developed a clinic-first mindset, concluding that every model must solve an actual diagnostic problem. Later, during her postdoctoral studies at Princeton University, she shifted her focus to machine learning systems and discovered that data protection and computational efficiency must be co-designed from the very beginning rather than tackled later as add-on features.

Now at UBC, with generous resources, access to a health network and a lab of eager students, Dr. Li has been able to scale up her research and leverage multi-center healthcare data for cancer diagnosis. Through machine learning networks like the Vector Institute and CAIDA, she also works on fundamental machine learning problems to advance the underlying algorithms.

“Together, these experiences drive a lab culture that demands methodological rigour, cross-disciplinary problem framing and real-world deployment—indispensable pillars for trustworthy AI that truly reaches patients,” she concludes.

Life Outside the Lab

Outside of research, Dr. Li keeps both body and curiosity in motion. She enjoys tennis, working on her golf swing and trekking Vancouver’s North Shore trails. She’s also an avid amateur photographer.

“Photo walks sharpen my attention to subtle patterns and textures, a habit that carries into how I analyze data,” she says.

To unwind, she programs, reads and writes fiction.

Final Thoughts

With her vast experience in machine learning, Dr. Xiaoxiao Li is helping redefine what it means to build responsible AI in medicine. Through technical innovation and a deep commitment to equity, transparency and patient-centered design, her work is making AI not just smarter but safer, more equitable and worthy of clinical trust.